Gotta load fast! Speeding up this blog

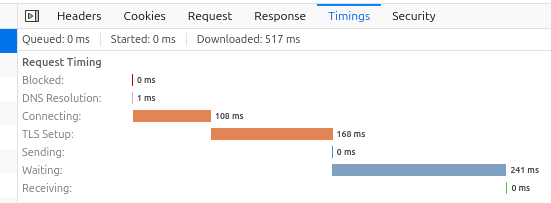

I’ve been hosting this blog on GitLab Pages for the past eight years or so, and it’s been working great, with no real issues. Well, there is one “problem”: the page loading speed isn’t exactly slow... but it’s not fast either. Opening the dev tools reveals that the main culprit is latency. GitLab Pages are hosted in the United States, while I’m based across the pond in Germany. This means the round trip to set up a connection (TCP handshake and such) and deliver the initial HTML document takes about 400–600 ms. The HTML document references other files that need to be fetched from the server, like style sheets and JavaScript. These can only be requested once the browser has received and parsed the HTML that references them¹. After the initial request we can reuse the connection and skip the TCP setup; however, the other resources still need to be transmitted, parsed, and finally rendered. As a result, the page is fully rendered after about a second. That isn’t terrible, but it doesn’t feel fast either. Given that almost no one is reading this blog, it’s probably fine. But I want it to feel fast, so let’s see what can be done.

Reducing latency

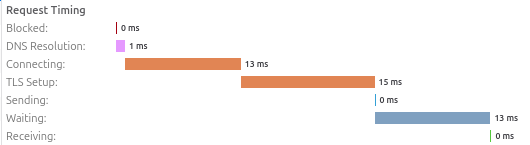

First, let’s do a sanity check to confirm that latency is actually the issue. For comparison, I set up a virtual machine with Hetzner, which is hosted in Germany. Serving the site with Nginx and a Let’s Encrypt certificate, I get round-trip times of around 10–15 ms. We can test this further with a VPN. If we connect to a VPN endpoint in the US, there’s minimal overhead when connecting to GitLab Pages. However, connecting to the server in Germany then incurs significant overhead, as the traffic first has to go to the US endpoint and then back to Germany. So the physical distance between me and the hosting provider does seem to be the main culprit.
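If you want to put numbers on these phases yourself rather than reading them off the dev tools waterfall, the browser’s Navigation Timing API exposes them. The following is a minimal sketch, not necessarily how the numbers above were obtained; the function name `timingBreakdown` is made up for illustration. Run it after the page’s `load` event, because `loadEventEnd` stays 0 until then.

```javascript
// Summarize where the time went for one page navigation, in milliseconds.
// `t` is a PerformanceNavigationTiming entry (or any object with the same fields).
function timingBreakdown(t) {
  return {
    dns: t.domainLookupEnd - t.domainLookupStart,
    // Connection setup; connectEnd includes the TLS handshake, if any.
    connect: t.connectEnd - t.connectStart,
    // TLS share of the connection setup (secureConnectionStart is 0 for plain HTTP).
    tls: t.secureConnectionStart > 0 ? t.connectEnd - t.secureConnectionStart : 0,
    // From sending the request until the first byte of the response arrives.
    waitForFirstByte: t.responseStart - t.requestStart,
    download: t.responseEnd - t.responseStart,
    // From the start of the navigation until the load event has finished.
    total: t.loadEventEnd - t.startTime,
  };
}

// Browser-only part: print the breakdown for the current page, if available.
if (typeof performance !== 'undefined' && typeof performance.getEntriesByType === 'function') {
  const [nav] = performance.getEntriesByType('navigation');
  if (nav) console.table(timingBreakdown(nav));
}
```

On a high-latency connection, `connect` and `waitForFirstByte` are the fields that grow with the distance to the server.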

Of course, I don’t want to pay €5 per month for a virtual machine just to host this website closer to me. So I started looking for alternative (free) hosting options closer to Germany. I looked into a number of alternatives, but Cloudflare Pages seemed easy to use. They also advertise a global network of edge locations that cache content, promising low latency worldwide, which sounds like exactly what we need to solve our latency problem. So I registered. Connecting my GitLab repository, where the code for this blog is stored, was easy. They even have an online build system for static websites ready to go, and, even better, it supports Hugo, the static site generator I’m using. After setting up a DNS record, everything worked.

But did that actually solve our latency problem? Kinda. Initially, my testing showed inconsistent results. Sometimes the latency was great, but other times it reverted to the same timing I experienced with GitLab Pages. I suspect that, with so little traffic, it was (at least initially) difficult to predict which edge locations are relevant for this website. It took a few days for the latency to really come down. But anyway, what’s better than low latency? No latency!

Eliminating latency

The first request and the initial page load will always be affected by server latency. However, we can eliminate latency for subsequent interactions by prefetching the HTML documents for posts the user might want to visit next. While the user is on the homepage, I monitor which posts are currently visible and prefetch all of them. As the user scrolls, newly visible posts are prefetched as well. This ensures that if the user clicks on a post, it has already been fetched and is ready to render.
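The visibility tracking described above maps naturally onto the browser’s IntersectionObserver API. Here is a minimal sketch, not necessarily how this blog implements it. The selector `a.post-link` is hypothetical, and `prefetchFn` stands for whatever prefetch routine you use, such as the `prefetch()` function in this post. The observer class is a parameter only so the logic can be exercised outside a browser.

```javascript
// Prefetch each post link once it becomes visible in the viewport.
// `links`: iterable of <a> elements; `prefetchFn(url)`: the prefetch routine.
// `Observer` defaults to the browser's IntersectionObserver.
function prefetchVisibleLinks(links, prefetchFn, Observer = globalThis.IntersectionObserver) {
  const requested = new Set(); // URLs already handed to prefetchFn

  const observer = new Observer((entries) => {
    for (const entry of entries) {
      if (!entry.isIntersecting) continue; // not on screen yet
      observer.unobserve(entry.target);    // each link only needs handling once
      const url = entry.target.href;
      if (!requested.has(url)) {           // the same post may be linked twice
        requested.add(url);
        prefetchFn(url);
      }
    }
  });

  for (const link of links) observer.observe(link);
  return observer;
}

// Usage in the browser (hypothetical selector):
// prefetchVisibleLinks(document.querySelectorAll('a.post-link'), prefetch);
```

Unobserving a link after its first appearance keeps the callback cheap while scrolling, and the `Set` avoids requesting the same URL twice.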

Thankfully, there’s a mechanism designed for exactly this kind of prefetching: `<link rel="prefetch">`. Unfortunately, not all browsers honor it. So, as with many things on the web, we need a workaround. We check whether the browser supports prefetching (thanks Krampstudio). If it does, we use prefetch; otherwise, we fall back to fetching the content manually with `fetch()`. This isn’t perfect, as the fallback starts the request immediately, where it competes with the requests needed to render the current page. Still, I’d rather wait an extra second upfront and have everything run smoothly afterward.

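```javascript
// Minimal stand-in for the support() feature test credited to Krampstudio
// (assumed implementation): asks the browser whether it knows a link rel value.
function support(feature) {
  const link = document.createElement('link');
  try {
    return Boolean(link.relList && link.relList.supports && link.relList.supports(feature));
  } catch (err) {
    return false; // be defensive: some older browsers throw here
  }
}

// Request `url` ahead of time so a later navigation can be served from cache.
function prefetch(url) {
  if (support('prefetch')) {
    // Preferred path: a low-priority <link rel="prefetch"> hint.
    const link = document.createElement('link');
    link.rel = 'prefetch';
    link.href = url;
    link.as = 'document';
    document.head.appendChild(link);
  } else {
    // Fallback: fetch right away; reuse later depends on the response's cache headers.
    fetch(url);
  }
}
```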

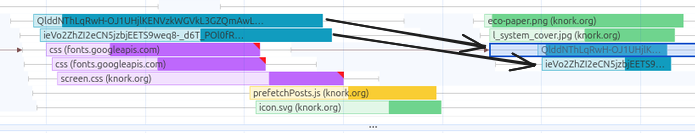

Okay, great: we got the browser to request documents and resources that we’ll need in the future. However, we also need to make sure that the browser actually keeps them; in other words, that it caches the documents. Otherwise, prefetched files are discarded right away and fetched again once they are actually needed. This is illustrated by this wonderful diagram, where we (pre)fetch a font and then load it again once the stylesheet requests it.

We need to tell the browser that it should cache these files. However, caching behavior is controlled by the server that returns the files, more specifically by the `Cache-Control` response header it sends. Cloudflare wants to make sure that changes to our website are displayed to users immediately, so by default they send `cache-control: public, max-age=0, must-revalidate`. Fortunately, Cloudflare Pages lets us customize response headers by adding a `_headers` file to the site. GitLab Pages, on the other hand, sets caching to a default of 600 s for most files².
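To see why that default defeats prefetching, here is a simplified sketch of the HTTP freshness rule from RFC 9111: a cached response may be reused without contacting the server only while its age is below its `max-age`. With `max-age=0` the copy is stale the moment it arrives, so using it requires another round trip to revalidate it. The helper names are made up, and the check deliberately ignores `Expires`, heuristic freshness, `s-maxage`, and other details that real browsers handle.

```javascript
// Parse a Cache-Control header value into { directive: value-or-true }.
function parseCacheControl(header) {
  const directives = {};
  for (const part of header.split(',')) {
    const [rawName, ...rest] = part.trim().split('=');
    if (!rawName) continue; // skip empty segments such as a trailing comma
    const name = rawName.toLowerCase();
    // Directives without "=value" (e.g. "public") are stored as true.
    directives[name] = rest.length ? rest.join('=').replace(/^"|"$/g, '') : true;
  }
  return directives;
}

// Simplified check: can a cached copy that is `ageSeconds` old be used
// without asking the server first?
function canReuseWithoutRevalidation(header, ageSeconds) {
  const cc = parseCacheControl(header);
  if (cc['no-store'] || cc['no-cache']) return false; // never reuse as-is
  const raw = cc['max-age'];
  if (typeof raw !== 'string' || !/^\d+$/.test(raw)) return false; // no explicit lifetime
  return ageSeconds < Number(raw); // fresh while age < max-age
}
```

With Cloudflare’s default, `canReuseWithoutRevalidation('public, max-age=0, must-revalidate', 0)` is `false` even for a copy that was fetched a moment ago, whereas a `max-age=600` header keeps a prefetched copy usable for ten minutes.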

Finally, we simplify the website’s CSS and load styling and JavaScript only for pages that actually need them. For example, I use MathJax to display LaTeX formulas, but not all posts contain math. So for posts like this one, which has no formulas, we don’t need to load or execute MathJax.
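One way to load MathJax only where it is needed is a small client-side check. This is a sketch of that idea, not necessarily how this blog does it; a Hugo site could just as well make the decision at build time. It assumes MathJax 3’s default delimiters (`\(...\)` for inline math, `$$...$$` or `\[...\]` for display math) and loads MathJax 3 from the jsDelivr URL given in MathJax’s documentation. `containsMath` and `loadScriptOnce` are hypothetical helper names.

```javascript
// True if the text contains one of MathJax 3's default TeX delimiters:
// \( for inline math, $$ or \[ for display math.
function containsMath(text) {
  return /\\\(|\\\[|\$\$/.test(text);
}

// Append an async <script> for `src` unless one is already on the page.
// Returns true if a new script tag was added.
function loadScriptOnce(src, doc) {
  if (doc.querySelector(`script[src="${src}"]`)) return false;
  const script = doc.createElement('script');
  script.src = src;
  script.async = true;
  doc.head.appendChild(script);
  return true;
}

// Browser-only part; assumes it runs after the body has been parsed (e.g. a deferred script).
const MATHJAX_SRC = 'https://cdn.jsdelivr.net/npm/mathjax@3/es5/tex-mml-chtml.js';
if (typeof document !== 'undefined' && document.body && containsMath(document.body.textContent)) {
  loadScriptOnce(MATHJAX_SRC, document);
}
```

A false positive (say, a code snippet containing `\(`) only costs an unnecessary MathJax download, so a simple text check is a reasonable trade-off here.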

And that’s about it. The pages load significantly faster now, and I hope they also feel more responsive.

1. We can nowadays use Early Hints to send a preliminary HTTP response (status code 103) ahead of the requested document, indicating resources that should be preloaded. However, this requires some control over the server, which we don’t have when using simple static site hosting. ↩

2. For some reason, font files like .woff2 seem to be excluded from this caching policy. One might think it’s preferable to load fonts from Google directly instead: there’s a chance the user has already loaded that particular font on another website and it’s still in the browser cache. Turns out that doesn’t help. Google’s response does carry the header `cross-origin-opener-policy: same-origin`, but that header only governs how windows interact. What actually prevents reuse is that modern browsers partition their HTTP cache by top-level site, so a font cached while visiting another website is not shared with this one. ↩